The history of “drain(ing) the swamp(s)”

In US political discourse, the phrase drain the swamp(s) usually refers to fighting corruption and undue influence. But the origins of the expression are quite far from this sense. The swamps in question are the Pontine Marshes (Pomptinae Paludes) to the south of Rome. Efforts to drain them have been made, on and off, for three millennia, and even predate Roman settlement in the region. The Appian Way (Via Appia, completed in 312 BCE), a famous ancient road, traversed the swamps, and major efforts (by the senators and consuls, by the emperors, and by the medieval popes) were required to keep the roadbed above water level. And of course the swamps’ waters are infested with malarial mosquitoes. Thus it is no surprise that many a historical Roman leader used “drain the swamps!” as a political slogan.

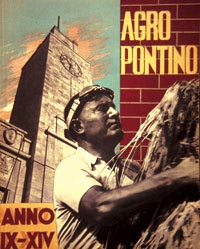

The most famous swamp drainer of all is Benito Mussolini, who tackled the marshes (now known as Agro Pontino) as part of a flashy, highly publicized infrastructure campaign. Once the project was completed—with untold workers succumbing to malaria in the process—2,000 pro-fascist families from North Italy were granted farmsteads in former swampland. But after the Allied invasion of Sicily, the Armistice of Cassibile, and the Nazi reinforcement of Italy, the Nazis stopped the pumps and opened the dikes, flooding the marshes with brackish water. While it’s not at all clear this tactic was effective at slowing down Allied advances, it certainly did help to spread malaria (at a time when quinine was in short supply) and it utterly devastated the region’s civilian population. It was an act of biological warfare against a now-hostile civilian population no longer aligned with the Nazi cause.

Nowadays the swamp waters are relatively well-controlled. Liberal application of the pesticide DDT in the mid-20th century helped to rein in the mosquito population, and the region has largely been repopulated.

Postscript: I want to be clear that I’m not saying that “drain the swamp” is always intended to index Mussolini (or whatever), just that many well-read Westerners will likely see use of this expression as “normalizing fascism”.

A Morris Halle memory

Morris Halle passed away earlier today. Morris was an absolute giant in the field of linguistics. His work in the 1950s and 1960s completely revolutionized phonological theory. He did this, primarily, by rejecting an axiom of the previous century’s work.

The theory of phonology was so utterly transformed by his argument against the principle of biuniqueness that the very concept is rarely even taught in the 21st century.

And this was just one of his earliest scientific contributions.

I could say a lot more about Morris’s work, but instead let me tell a short anecdote. In 2010 or so I happened to be in the Boston area and my advisor kindly arranged for me to meet Morris. After getting coffee we walked to his spare shared office. The only thing of note was a single wall-mounted bookshelf containing three books: Morris’s own Sound Pattern of Russian and Sound Pattern of English—with the dust cover removed so as to exhibit the unique bas-relief cover designed by Morris’s wife, a talented visual artist—and of course, Walker’s rhyming dictionary. For whatever reason, we started to discuss Latin. Working on a legal pad, Morris first showed me a novel analysis of thematic vowels. Ignoring a few irregular (“athematic”) stems, all Latin verb stems have a characteristic final vowel: -ā- in the first conjugation, -ē- in the second, a vowel of varying quality (usually e or i) in the third, and -ī- in the fourth. In the first conjugation and most of the third conjugation, this vowel disappears in the first person singular active indicative verb, which is marked with an -ō suffix. Thus for the second conjugation verb docēre ‘teach’, we have doceō ‘I teach’, with the theme vowel preserved, and similarly for the fourth conjugation. In contrast, for the first conjugation verb amāre ‘love’, we have amō ‘I love’, with the theme vowel omitted, and similarly for the majority of the third conjugation. This much I already knew. To me it was just one of those conjugational quirks one has to memorize when learning Latin, but Morris suggested that it was not necessarily so. What if, he argued, the first conjugation -ā- was deleted by a following -ō? (Certainly that rule is surface-true, except for a handful of Greek loanwords like chaos.) But what about the third conjugation?
Morris suggested that he had long believed the underlying form of the third conjugation theme vowel was [+back], something like /ɨ/, and he proceeded to lay out the necessary allophonic rules, and finally a rule which deletes the first of two [+back] segments! I was floored.

I then showed him an analysis I was working on at the time. Once again ignoring a few irregulars, Latin masculine and feminine nouns of the third declension are characterized by a nominative singular suffix -s. When the noun stem is athematic and ends in a /t, d/, this consonant is deleted in the nominative singular (e.g., frons, frontis ‘forehead’). I argued that this rule ought to be extended to also target /r/ so as to account for the so-called “rhotic” stems like honōs, honōris ‘honor’ (e.g., /honōr-s/ → [honōs]). To make this work, one must write the rule so that it bleeds its own application, and treat it as one of several opaque rules (see here for the full analysis). This is something which is possible in the rule-application framework proposed by Morris and colleagues, but which cannot be straightforwardly implemented in more recent theoretical frameworks. I must have hesitated for a moment as I was talking through this, because Morris grabbed my hand and said to me: “Young man, remember always to speak clearly and to never apologize for your rule ordering.” And then he bid me adieu.

When should we call it “terrorism”?

According to White House Press Secretary Sarah Huckabee Sanders, a recent spate of serial bombings targeting prominent African-Americans in Austin, TX, has “no apparent nexus to terrorism at this time”. I want to make a pedantic lexicographic point about the definition of terrorism (and terrorist) regarding this. There is certainly a sense of terrorism which just involves random lethal violence against civilians, and by that definition this absolutely qualifies. But that is not the definition used by the state (or mass media). Rather, they favor an alternative sense which emphasizes the way in which the violence undermines the authority of the state. This is in fact encoded in the (deeply evil) PATRIOT Act, which defines terrorism as an attempt “…to influence the policy of a government by intimidation or coercion; or to affect the conduct of a government by mass destruction, assassination, or kidnapping.” Let’s assume, as seems likely though by no means certain, that the bomber(s) are white supremacists targeting African-American communities. You’d be hard-pressed to argue that terrorizing people of color undermines the authority of a deeply racist society and its institutions any more than, say, trafficking crack cocaine in African-American communities to support right-wing death squads abroad. Terrorizing people of color is absolutely in line with US domestic and foreign policy, and the language chosen by the White House (and parroted by the media) naturally reflects that.

Another pseudo-decipherment of the Voynich manuscript

The Voynich manuscript consists of 240 pages of text and fanciful illustrations written in an unknown script. It is first mentioned in the 16th century, then largely disappears from the record for several centuries, only to resurface for sale in 1903. An independent carbon dating assigns an early 15th century date to the vellum, but some scholars speculate it may have been inked or re-inked at a later date. Other scholars believe it to be an elaborate hoax or forgery. A recent paper in Transactions of the Association for Computational Linguistics (TACL) by Bradley Hauer & Grzegorz Kondrak (henceforth H&K) entitled Decoding Anagrammed Texts Written in an Unknown Language was touted to have enabled a decipherment of the Voynich. Have H&K succeeded where others have failed? Unfortunately, having reviewed the paper carefully, I can say with some certainty that they have not.

H&K propose two techniques towards decipherment. First, they describe methods to determine the underlying language of a plaintext using only the ciphertext, assuming a simple bijective substitution cipher. Their preferred method does not depend on the linear order of strings within the ciphertext, and thus works equally well when the ciphertext characters have been permuted within words (assuming that word boundaries are somehow clearly delimited in the ciphertext), a point which will become important shortly. Then, they describe methods for cryptanalysis when the encipherment consists of a bijective substitution cipher under certain degenerate conditions, such as where the ciphertext lacks vowels, or where the ciphertext characters have been randomly permuted within words.

That much is fine (though I have some quibbles with the details, as you’ll see). My major issue with H&K is that they don’t provide any evidence that the Voynich is so encoded, they simply assume it. And, despite the press hype, their preferred method fails to produce anything remotely readable.

I don’t have much to say about their method for identifying source language; it is a relatively novel task—they only cite one prior work—and their method and evaluation both appear to be sound. I appreciate that their evaluation includes a brute force-like method of simply attempting to decipher the text as a given language, and as a topline, an “oracle” scenario in which the decipherment is known and the problem reduces to standard language ID. But I was struck by the following claim about their decipherment method (p. 79):

“We conclude that our greedy-swap algorithm strikes the right balance between accuracy and speed required for the task of cipher language identification.”

It’s hard for me to imagine in what sense “cipher language identification” might be considered something which needs to be fast (rather than merely feasible). I think, in contrast, we would be just fine with using supercomputers for this task if it worked.[1]

So what does their preferred method say about the plaintext language of the Voynich? It assigns, by far, the highest probability to Hebrew.[2,3] Naturally, the oracle scenario is inapplicable here; whereas most archaeological decipherments have worked from a small set of candidate languages for the plaintext, there is nothing like a consensus regarding the language of the Voynich.

H&K then consider methods for decipherment itself. This problem is essentially a type of unsupervised machine learning in which the objective is to identify a mapping from ciphertext to plaintext (a key) such that for the ciphertext, we maximize the probability of the plaintext with respect to some language model. Kevin Knight and colleagues have, in the last two decades, proposed three distinct applications for this scenario:

- Unsupervised translation: Knight & Graehl (1998) use this scenario to learn low-resource transliteration models, and some subsequent work has applied this to other low-resource, small-vocabulary tasks, but as of yet such methods don’t scale well to machine translation in general.

- Steampunk cryptanalysis: Knight et al. (2006) use this scenario for unknown-plaintext cryptanalysis of bijective substitution ciphers, and subsequent work has also applied this to homophonic and running key ciphers. But the aforementioned ciphers have been known to be vulnerable to pencil-and-paper attacks for a century or more, and it’s not clear that these methods are effective attacks against any cryptosystem in widespread use today.

- Archaeological decipherment: Snyder et al. (2010) attempt to simulate the automatic decipherment of Ugaritic, a Semitic language written in a cuneiform script in the 14th through the 12th century BCE; the Ugaritic texts were manually deciphered in 1929-1931 by exploiting the language’s strong similarity to biblical Hebrew. Knight et al. (2012) show that an undeciphered 18th century manuscript is in fact a description of a Masonic ritual written in German and encoded using a homophonic cipher. However, others have argued that computational methods for archaeological decipherment are still quite limited. For instance, Sproat (2010a,b, 2014) draws attention to the unsolved problem of determining whether a symbol system represents language in the first place, and to the long history of pseudo-decipherment.

Regardless of the application, it should be obvious that decipherment is a computationally challenging problem. Formally, given a bijective cipher over an alphabet K, the keyspace has size |K|!, since each candidate key is a permutation of K. Three classes of methods are found in the literature:

- Integer linear programming (ILP; e.g., Ravi & Knight 2008)

- A linear relaxation of the ILP to expectation maximization or related methods (e.g., Knight et al. 2006)

- Search-based techniques using a beam or tree (e.g., Hauer et al. 2014)
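To make the size of the search problem concrete, here is a minimal sketch in Python of decipherment as "find the key that maximizes language model probability". It is not H&K's method, nor any of the three classes of methods listed above: it simply enumerates every key, which is only feasible for a toy alphabet. The add-alpha bigram model, the function names, and the three-letter alphabet are all my own assumptions for illustration.

```python
import math
from collections import Counter
from itertools import permutations


def keyspace_size(alphabet):
    """Number of bijective substitution keys over `alphabet`, i.e. |K|!."""
    return math.factorial(len(alphabet))


def train_bigram_model(text, alphabet, alpha=1.0):
    """Add-alpha smoothed character-bigram log-probabilities (a toy language model)."""
    symbols = sorted(set(alphabet) | {" "})
    pair_counts = Counter(zip(text, text[1:]))
    context_counts = Counter(text[:-1])
    vocab = len(symbols)
    return {
        (a, b): math.log(
            (pair_counts[(a, b)] + alpha) / (context_counts[a] + alpha * vocab)
        )
        for a in symbols
        for b in symbols
    }


def log_prob(text, model):
    """Log-probability of `text` under the bigram model (the first symbol is not scored)."""
    return sum(model[pair] for pair in zip(text, text[1:]))


def brute_force_decipher(ciphertext, alphabet, model):
    """Score every key; return the (key, plaintext, score) triple with the highest score."""
    best = (None, None, -math.inf)
    for perm in permutations(alphabet):
        key = dict(zip(alphabet, perm))  # cipher symbol -> plaintext symbol
        # Symbols outside the alphabet (here, spaces) pass through unchanged.
        candidate = "".join(key.get(c, c) for c in ciphertext)
        score = log_prob(candidate, model)
        if score > best[2]:
            best = (key, candidate, score)
    return best


if __name__ == "__main__":
    toy_alphabet = "abn"  # hypothetical three-letter alphabet: only 3! = 6 keys
    model = train_bigram_model("banana ban nab banana", toy_alphabet)
    print(brute_force_decipher("bnbaba nba", toy_alphabet, model))
    print(keyspace_size("abcdefghijklmnopqrstuvwxyz"))
```

For a 26-letter alphabet the keyspace is 26!, roughly 4 × 10^26 keys, which is why the literature turns to ILP, EM, or heuristic search instead of enumeration.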

H&K’s preferred method is a case of the last of these; they refer to this prior work as “state-of-the-art”, but skimming Hauer et al. suggests they are state-of-the-art on decrypting snippets of text randomly sampled from the English Wikipedia article “History”. This doesn’t strike me as an acceptable benchmark, even if there’s some precedent in the literature, and what’s worse is that they use snippets as short as two characters, which are well below the well-known theoretical bound.

H&K propose two adaptations of the Hauer et al. model. First, they consider a variant which can handle ciphertext in which characters have been permuted (“anagrammed”) within words (assuming that word boundaries are clearly delimited in the ciphertext and are the same as word boundaries in the plaintext). H&K mention that this has been suggested in prior Voynichology—though this might well be pure speculation, since we can’t read the manuscript—but do not themselves argue that the Voynich is anagrammed. Random permutation of letters within words strikes me as a poor cryptographic strategy due to the non-determinism it introduces. Rof nnastcie anc uyo adre shti eetnencs? That’s hard to read, in my opinion, though not completely impossible with enough context.[4] While I can’t really put myself into the mind of the creators of the Voynich manuscript, it seems that a wide degree of hermeneutic freedom is undesirable in most written genres, even texts of, say, an occult nature: you don’t want to accidentally turn yourself into a newt! Secondly, H&K adapt their model so that it can restore vowels omitted in the plaintext.[5] They refer to the resulting ciphertext with vowels omitted as an “abjad”, using a rare term of art for consonantal writing systems, i.e., those in which vowels are omitted. Phoenician, the ancestor of the Greek & Latin alphabets, did not originally write vowels at all, but they are inconsistently present in later texts and both Hebrew & Arabic write certain vowels. In Standard Arabic, for example, all long vowels are written explicitly, and Hebrew during the Renaissance era was normally written with the Tiberian diacriticization (or niqqud) developed several centuries earlier.
H&K seem to be assuming a total omission of vowels which would be both anachronistic and typologically rare. Had H&K mentioned either of those facts in a brief disclaimer admitting to their slight abuse of terminology, I wouldn’t think they were misled, or misleading the reader, about what an abjad (normally) is.
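To illustrate what within-word anagramming does to a text, here is a minimal Python sketch; the function names are mine, not H&K's. It scrambles the letters of each space-delimited word, and shows that an order-free "signature" (the word's letters, sorted) is unchanged by the scrambling. That invariance is the reason a method which ignores the linear order of characters within words is indifferent to anagramming.

```python
import random


def anagram_words(text, rng):
    """Randomly permute the characters inside each space-delimited word."""
    scrambled = []
    for word in text.split(" "):
        chars = list(word)
        rng.shuffle(chars)  # in-place shuffle using the supplied generator
        scrambled.append("".join(chars))
    return " ".join(scrambled)


def signature(word):
    """Order-free representation of a word: its characters in sorted order."""
    return "".join(sorted(word))


def signatures(text):
    """Signatures of every space-delimited word, in their original positions."""
    return [signature(word) for word in text.split(" ")]


if __name__ == "__main__":
    rng = random.Random(2018)  # arbitrary seed, chosen only for repeatability
    sentence = "for instance can you read this sentence"
    scrambled = anagram_words(sentence, rng)
    print(scrambled)
    print(signatures(scrambled) == signatures(sentence))
```

The scrambled sentence I gave above has exactly the same word signatures as "for instance can you read this sentence" (ignoring case), even though the two strings look nothing alike to a human reader.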

It seems to me that H&K have, at this point, taken a method-free leap of faith towards the hypothesis that the Voynich is vowel-less Hebrew, anagrammed and encoded with a bijective substitution cipher. Perhaps I’d be willing to forgive it if these assumptions allowed them to produce some readable plaintext. Here’s what they have to say about that (p. 84):

“None of the decipherments appear to be syntactically correct or semantically consistent. […] The first line of the VMS [Voynich manuscript]…is deciphered into Hebrew as ועשה לה הכה איש אליו לביחו ו עלי אנשי המצות. According to a native speaker of the language, this is not quite a coherent sentence. However, after making a couple of spelling corrections, Google Translate is able to convert it into passable English: ‘She made recommendations to the priest, man of the house and me and people.'”

So the authors, neither of whom apparently are native speakers of Hebrew, post-edited the output of their system until the MT decoder produced this sentence. As others have noted, this is not an acceptable method—modern MT systems are extremely good at producing locally coherent text from degenerate input.

H&K suggest two possible interpretations of their results: “the results presented in this section could be interpreted either as tantalizing clues for Hebrew as the source language of the VMS, or simply as artifacts of the combinatoric power of anagramming and language models.” (p. 84f.) So they are not really claiming, at least in this article, a decipherment—that’s an addition of the subsequent, irresponsible press coverage, for which I can’t really blame H&K—but I can’t imagine calling this “tantalizing”. I don’t see any reason to think H&K have any confidence in their decipherment, either: they don’t provide more than a single plaintext sentence, and don’t provide a key. Had I been asked to review this paper, I would have requested that the portion of the paper dealing with language identification employ corpora of non-linguistic symbol systems (such as those in Sproat 2014), and I would have insisted that the portion of the paper dealing with the decipherment of the Voynich be essentially scrapped. The Voynich angle is a red herring: there is nothing here. Had they just removed it, this would have been a perfectly good TACL paper!

In 2010, my colleague Richard Sproat wrote a brief article for the journal Computational Linguistics (Sproat 2010b) which reviewed a recent paper by Rao et al. (2009), published in the journal Science. Rao et al. claim to provide statistical evidence that the Indus Valley seals are a writing system. Now there are quite a few reasons to suspect the seals are not writing under any common-sense definition thereof. More importantly, though, Rao et al.’s method fails to discriminate between linguistic and non-linguistic symbol systems (see, e.g., Sproat 2014). Sproat implies that had the Science editors simply retained computational linguists as referees, they would have been made aware of the manifest flaws of Rao et al.’s paper and would thus have rejected it. With respect to my colleague, he has been shown wrong on both counts. First, when these journals retain computational linguist referees, they simply ignore negative reviews of technically-flawed, linguistically-oriented work when it has sufficient “woo factor”. Secondly, woo factor trumps lack of method even in one of the top journals for computational linguistics and natural language processing, one which I review for and publish in. Some recent research suggests that fanciful university press releases are a key contributor to scientific hype. As far as I can tell, that is what happened here: the “tantalizing clues” in a flawed journal article were wildly exaggerated by the University of Alberta press office, and major publications took the press release at its hyperbolic word.

PS: If you’re interested in more wild speculation about the Voynich manuscript, may I suggest you check out @voynich_bot on Twitter?

Acknowledgements

Thanks to Brian Roark & Richard Sproat for feedback on this.

Endnotes

[1] The hacks at the Daily Mail are rather confused here; Carmel isn’t a supercomputer—it’s a free software package for doing expectation maximization over finite-state transducers—and at worst you might want to run these kinds of experiments using a top-of-the-line microcomputer, possibly with a powerful graphics card (e.g., Berg-Kirkpatrick & Klein 2013).

[2] An alternative method prefers Mazatec, which H&K correctly reject as chronologically implausible; a few other top possibilities are Mozarabic, Italian, and Ladino, which H&K consider “plausible”. Mozarabic is an extinct Romance language that was spoken (but only rarely written) by Christians living in Moorish Spain; it is unclear whether H&K are using the Arabic or the Roman orthography (neither was really standard). Ladino was spoken in the same region and time period but by the Sephardic population; it was written using Hebrew characters. As far as I know, both languages would have declined rapidly after the conclusion of the Reconquista, which imposes a terminus ante quem of roughly 1492, if either is the plaintext language of the Voynich.

[3] For reasons unclear to me they only use 43 pages of the manuscript in their Voynich experiments. This seems like a major flaw to me. Had I been asked to review this paper, I would have requested a justification.

[4] To wit, in the CMU dictionary, 17% of six-character words are an anagram of at least one other word, and there are no fewer than fifteen anagrams of the sequence AEIMNR.
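A count like the one in endnote [4] can be computed along the following lines. This is a minimal Python sketch with hypothetical function names; it runs on a tiny made-up word list rather than the CMU dictionary, so its numbers are not the ones reported above.

```python
from collections import defaultdict


def anagram_classes(words):
    """Group words by their sorted-letter signature."""
    classes = defaultdict(set)
    for word in words:
        classes["".join(sorted(word))].add(word)
    return classes


def anagram_fraction(words, length):
    """Fraction of distinct `length`-letter words that are an anagram of another word."""
    selected = {w.lower() for w in words if len(w) == length}
    if not selected:
        return 0.0
    classes = anagram_classes(selected)
    # A word counts if its class contains at least one other word.
    shared = sum(len(members) for members in classes.values() if len(members) > 1)
    return shared / len(selected)


if __name__ == "__main__":
    # Toy word list for illustration only (not the CMU dictionary).
    toy = ["remain", "marine", "airmen", "listen", "silent", "banana", "planet", "cat"]
    print(anagram_fraction(toy, 6))
    print(sorted(anagram_classes(toy)["aeimnr"]))
```

Running the same two functions over the orthographic forms in a real pronunciation dictionary would give the per-length anagram rate.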

[5] H&K claim that one can’t use the linear relaxation method to restore vowels. I don’t see why, though. If the hypothesis space is expressed as a single-state weighted finite-state transducer, and the plaintext vowels are simply mapped to epsilon, then everything proceeds as normal. In fact I am running such an experiment with a ciphertext consisting of an “abjad” (no-vowel) rendering of the Gettysburg Address. I use a variant of the Knight et al. (2006) approach with Baum-Welch training and forward-backward decoding rather than their Viterbi approximations (software here). Because the resulting lattice is cyclic, the shortest-distance computation during the E-step is more complex than normal, but it does basically work. This is to be expected: you prbbly hv lttl trbl rdng txt tht lks lk ths. Experimental results forthcoming.
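The vowel-deletion channel in endnote [5] is easy to simulate in the forward direction. The Python sketch below is not the finite-state, Baum-Welch setup described above; it is only a dictionary-lookup illustration, with a hypothetical five-vowel inventory and a made-up lexicon. It strips vowels to get an "abjad" rendering, then lists the lexicon entries compatible with a given consonant skeleton.

```python
from collections import defaultdict

VOWELS = set("aeiou")  # assumption: orthographic vowels only; "y" counts as a consonant


def strip_vowels(text):
    """Delete every vowel letter, i.e. map each plaintext vowel to the empty string."""
    return "".join(c for c in text if c.lower() not in VOWELS)


def build_skeleton_index(lexicon):
    """Map each consonant skeleton to the sorted list of words that reduce to it."""
    index = defaultdict(list)
    for word in sorted(set(lexicon)):
        index[strip_vowels(word)].append(word)
    return index


def restore_candidates(skeleton, index):
    """All lexicon words whose vowel-less form equals `skeleton`."""
    return index.get(skeleton, [])


if __name__ == "__main__":
    # Toy lexicon for illustration only.
    index = build_skeleton_index(["lead", "load", "lid", "loud", "probably"])
    print(strip_vowels("probably have little trouble reading text"))
    print(restore_candidates("ld", index))
    print(restore_candidates("prbbly", index))
```

Long skeletons tend to have one candidate while short ones have several, so restoring vowels in practice needs a language model over the candidates, not just a lookup table.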

References

Berg-Kirkpatrick, Taylor; Klein, Dan. 2013. Decipherment with a million random restarts. In EMNLP, pages 874-878.

Hauer, Bradley; Hayward, Ryan; Kondrak, Grzegorz. 2014. Solving substitution ciphers with combined language models. In COLING, pages 2314-2325.

Hauer, Bradley; Kondrak, Grzegorz. 2016. Decoding anagrammed texts written in an unknown language. Transactions of the Association for Computational Linguistics 4: 75-86.

Knight, Kevin; Graehl, Jonathan. 1998. Machine transliteration. Computational Linguistics 24(4): 599-612.

Knight, Kevin; Nair, Anish; Rathod, Nishi; Yamada, Kenji. 2006. Unsupervised analysis for decipherment problems. In COLING, pages 499-506.

Knight, Kevin; Megyesi, Beáta; Schaefer, Christiane. 2012. The secrets of the Copiale cipher. Journal for Research into Freemasonry 2(2): 314-324.

Ravi, Sujith; Knight, Kevin. 2008. Attacking decipherment problems optimally with low-order n-gram models. In EMNLP, pages 812-819.

Rao, Rajesh; Yadav, Nisha; Vahia, Mayank; Joglekar, Hrishikesh; Adhikari, R.; Mahadevan, Iravatham. 2009. Entropic evidence for linguistic structure in the Indus script. Science 324(5931): 1165.

Snyder, Ben; Barzilay, Regina; Knight, Kevin. 2010. A statistical model for lost language decipherment. In ACL, pages 1048-1057.

Sproat, Richard. 2010a. Language, Technology, and Society. Oxford: Oxford University Press.

Sproat, Richard. 2010b. Ancient symbols, computational linguistics, and the reviewing practices of the general science journals. Computational Linguistics 36(3): 585-594.

Sproat, Richard. 2014. A statistical comparison of written language and nonlinguistic symbol systems. Language 90(2): 457-481.

Libfix report for February 2018

- While -splain (and -splainer, -splaining) clearly have potential, they hadn’t, as far as I could tell, gotten much beyond mansplain and occasionally, womansplain. But I changed my mind once I saw a podcast episode entitled “Orbsplainer”, about, well, the orb, you remember the orb, right? How could you forget the Orb? The Orb forbids it! Anyways, looks like a libfix to me.

- Constantine Lignos draws my attention to -tainment, a term which refers to media (particularly video and video games) which entertains while also doing something else. The locus classicus is the ’90s term edutainment, which looks much more like a blend than a libfix, as do infotainment, politainment, and psychotainment. But pornotainment suggests this is on its way to affix liberation.

What to do about the academic brain drain

The academy-to-industry brain drain is very real. What can we do about it?

Before I begin, let me confess my biases. I work in the research division of a large tech company (and I do not represent their views). Before that, I worked on grant-funded research in the academy. I work on speech and language technologies, and I’ll largely confine my comments to that area.

[Content warnings: organized labor, name-calling.]

Salary

Fact of the matter is, industry salaries are determined by a relatively-efficient labor market. Academy salaries are compressed, with a relatively firm ceiling for all but a handful of “rock star” faculty. The vast majority of technical faculty are paid substantially less than they’d make if they just took the very next industry offer that came around. It’s even worse for research professors who depend on grant-based “salary support” in a time of unprecedented “austerity”—they can find themselves functionally unemployed any time a pack of incurious morons seem to end up in the White House (as seems to happen every eight years or so).

The solution here is political. Fund the damn NIH and NSF. Double—no, triple—their funding. Pay for it by taxing corporations and the rich, or, better yet, divert some money from the Giant Death Machines fund. Make grant support contractual, so PIs with a five-year grant are guaranteed five years of salary support and a chance to realize their vision. Insist on transparency and consistency in “indirect costs” (i.e., overhead) for grants to drain the bureaucratic swamp (more on that below). Resist the casualization of labor at universities, and do so at every level. Unionize every employee at every American university. Aggressively lobby Democratic presidential candidates to agree to appoint National Labor Relations Board members who will continue to recognize graduate students’ right to unionize.

Administration & bureaucracy

Industry has bureaucratic hurdles, of course, but they’re in no way comparable to the profound dysfunction taken for granted in the academic bureaucracy. If you or anyone you love has ever written a scientific grant, you know what I mean; if not, find a colleague who has and politely ask them to tell you their story. At the same time American universities are cutting their labor costs through casualization, they are massively increasing their administrative costs. You will not be surprised to find that this does not produce better scientific outcomes, or make it easier to submit a grant. This is a case of what Noam Chomsky has described as the “neoliberal confidence trick”. It goes a little something like this:

- Appoint/anoint all-powerful administrators/bureaucrats, selecting for maximal incompetence.

- Permit them to fail.

- Either GOTO #1, or use this to justify cutting investment in whatever was being administered in the first place.

I do not see any way out of this situation except class consciousness and labor organizing. Academic researchers must start seeing the administration as potentially hostile to their interests, and refuse to identify with (or, quelle horreur, to join) the managerial classes.

Computing power & data

The big companies have more computers than universities. But in my area, speech and language technology, nearly everything worth doing can still be done with a commodity cluster (like you’d find in the average American CS department) or a powerful desktop with a big GPU. And of those, the majority can still be done on a cheap laptop. (Unless, of course, you’re one of those deep learning eliminationist true believers, in which case, reconsider.) Quite a bit of great speech & language research—in particular, work on machine translation—has come from collaborations between the Giant Death Machines funding agencies (like DARPA) and academics, with the former usually footing the bill for computing and data (usually bought from the Linguistic Data Consortium (LDC), itself essentially a collaboration between the military-industrial complex and the Ivy League). In speech recognition, there are hundreds of hours of transcribed speech in the public domain, and hundreds more can be obtained with an LDC contract paid for by your funders. In natural language processing, it is by now almost gauche for published research to make use of proprietary data, possibly excepting the venerable Penn Treebank.

I feel the data-and-computing issue is largely a myth. I do not know where it got started, though maybe it’s this bizarre press-release-masquerading-as-an-article (and note that’s actually about leaving one megacorp for another).

Talent & culture

Movements between academy & industry have historically been cyclic. World War II and the military-industrial-consumer boom that followed siphoned off a lot of academic talent. In speech & language technologies, the Bell breakup and the resulting fragmentation of Bell Labs pushed talent back to the academy in the 1980s and 1990s; the balance began to shift back to Silicon Valley about a decade ago.

There’s something to be said for “game knows game”—i.e., the talented want to work with the talented. And there’s a more general factor—large industrial organizations engage in careful “cultural design” to keep talent happy in ways that go beyond compensation and fringe benefits. (For instance, see Fergus Henderson’s description of engineering practices at Google.) But I think it’s important to understand this as a symptom of the problem, a lagging indicator, and as part of an unpredictable cycle, not as something to optimize for.

Closing thoughts

I’m a firm believer in “you do you”. But I do have one bit of specific advice for scientists in academia: don’t pay so much damn attention to Silicon Valley. Now, if you’re training students—and you’re doing it with the full knowledge that few of them will ever be able to work in the academy, as you should—you should educate yourself and your students to prepare for this reality. Set up a little industrial advisory board, coordinate interview training, talk with hiring managers, adopt industrial engineering practices. But, do not let Silicon Valley dictate your research program. Do not let Silicon Valley tell you how many GPUs you need, or that you need GPUs at all. Do not believe the hype. Remember always that what works for a few-dozen crypto-feudo-fascisto-libertario-utopio-futurist billionaires from California may not work for you. Please, let the academy once again be a refuge from neoliberalism, capitalism, imperialism, and war. America has never needed you more than we do right now.

If you enjoyed this, you might enjoy my paper, with Richard Sproat, on an important NLP task that neural nets are really bad at.

Disfluency in children with ASD and SLI

Our new article on disfluency in children with autism spectrum disorders (ASD) or specific language impairment (SLI) is now out in PLOS ONE. (The team consisted of Heather MacFarlane—who also did most of the annotation and much of the writing—myself, and Rosemary Ingham, Alison Presmanes Hill, Katina Papadakis, Géza Kiss, and Jan van Santen.)

There is a long-standing clinical impression that children with ASD are in some ways more disfluent than typically developing children, something likely related to their general difficulties with the set of abilities known as pragmatic language. We found that the few prior attempts to quantify this impression were difficult to interpret and, in some cases, put forth contradictory findings. One limitation that we observed in the prior work (other than poor controls and small samples, which one more or less expects in this area) is the lack of a well-thought-out schema for talking about different kinds of disfluency. Specialists in disfluency have largely operated “under the hypothesis that different types of disfluency manifest from different types of processing breakdowns”, so it is valuable to have a taxonomy of the types of disfluency so as to know what to count. Thus one of our goals in the paper is to adapt (to simplify, really) the schema used by Elizabeth Shriberg (in her 1995 UC Berkeley dissertation) and show that semi-skilled transcribers can achieve high rates of interannotator agreement using our schema. (We also show that much of the annotation can be automated, if one so chooses, and provide code for that.) Of course, we are even more interested in what we can learn about pragmatic language in children with ASD from our efforts at quantifying disfluency.

In a sample of 110 children with ASD, SLI, or typical development, we found two robust results. First, children with ASD produced a higher ratio of content mazes (repetitions, revisions, and false starts) to fillers (e.g., uh, um) than their typically developing peers. Second, children with ASD produced lower ratios of cued mazes (that is, content mazes that contain a filler) than their typically developing peers. We also found a suggestive result in a follow-up exploratory analysis: the use of cued mazes is positively correlated with chronological age in typically developing children (but not in children with ASD or SLI), which at least hints at a maturational account.
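To make the two measures concrete, here is a minimal Python sketch of how they might be computed from one child's annotated disfluencies. It is an illustration under assumptions, not the code released with the paper: the `Disfluency` record, the `summarize` function, the choice to count only standalone fillers in the denominator, and the choice to express cued mazes as a proportion of content mazes are all hypothetical.

```python
from dataclasses import dataclass

# Content maze types named in the post.
CONTENT_TYPES = {"repetition", "revision", "false_start"}


@dataclass
class Disfluency:
    """One annotated disfluency (hypothetical record layout)."""
    kind: str                 # "filler" or one of CONTENT_TYPES
    has_filler: bool = False  # True for a content maze containing a filler ("cued")


def summarize(disfluencies):
    """Return the content-maze-to-filler ratio and the cued-maze proportion.

    Assumption: fillers inside cued mazes are not counted as standalone fillers.
    A measure is None when its denominator is zero.
    """
    fillers = sum(1 for d in disfluencies if d.kind == "filler")
    content = [d for d in disfluencies if d.kind in CONTENT_TYPES]
    cued = sum(1 for d in content if d.has_filler)
    return {
        "content_to_filler": len(content) / fillers if fillers else None,
        "cued_proportion": cued / len(content) if content else None,
    }


# Made-up annotations for a single transcript.
sample = [
    Disfluency("filler"),
    Disfluency("filler"),
    Disfluency("repetition"),
    Disfluency("revision", has_filler=True),
    Disfluency("false_start"),
]
result = summarize(sample)
```

With three content mazes, two fillers, and one cued maze, this made-up transcript gives a content-to-filler ratio of 1.5 and a cued proportion of one third.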

If you have anything to add, please feel free to leave post-publication comments at the PLOS ONE website.

Classifying paraphasias with NLP

I’m excited about our new article in the American Journal of Speech-Language Pathology (with Gerasimos Fergadiotis and Steven Bedrick) on automatic classification of paraphasias using basic natural language processing techniques.

Paraphasias are speech errors associated with aphasia. Roughly speaking, these errors may be phonologically similar to the target (dog for the target LOG) or dissimilar. They may also be semantically similar to the target (dog for the target CAT), or both phonologically and semantically similar (rat for the target CAT). Some are neologisms (tat for the target CAT). Finally, some paraphasias are real words that are neither phonologically nor semantically similar to the target. The relative frequencies of these types of errors differ between people with aphasia. These frequencies can be measured in a confrontation naming task and, with complex and time-consuming manual error classification, used to create individualized profiles for treatment.

In the paper, we take archival data from a confrontation naming task and attempt to automate the classification of paraphasias. To quantify phonological similarity, we automate a series of baroque rules. To quantify semantic similarity, we use a computational model of semantic similarity (namely cosine similarity with word2vec embeddings). And, to identify neologisms, we use frequency in the SUBTLEX-US corpus. The results suggest that test scoring can in fact be automated with performance close to that of human annotators. With advances in speech recognition, it may soon be possible to develop a fully automated computer-adaptive confrontation naming task!
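For readers who want a feel for the semantic and neologism checks, here is a minimal Python sketch. It is not the paper's implementation: the three-dimensional vectors stand in for real word2vec embeddings, the counts stand in for SUBTLEX-US frequencies, and the 0.5 threshold and function names are assumptions chosen for illustration. The phonological rules are omitted entirely.

```python
import math

# Toy vectors standing in for word2vec embeddings (made-up values).
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.3, 0.1],
    "log": [0.0, 0.2, 0.9],
}

# Toy counts standing in for a frequency list such as SUBTLEX-US (made-up values).
FREQUENCIES = {"cat": 3000, "dog": 5000, "log": 400}

SIMILARITY_THRESHOLD = 0.5  # hypothetical cutoff, not taken from the paper


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def is_neologism(response):
    """Treat a response as a neologism if it never occurs in the frequency list."""
    return FREQUENCIES.get(response, 0) == 0


def is_semantically_similar(target, response):
    """True if both words have vectors and their cosine meets the threshold."""
    if target not in EMBEDDINGS or response not in EMBEDDINGS:
        return False
    similarity = cosine_similarity(EMBEDDINGS[target], EMBEDDINGS[response])
    return similarity >= SIMILARITY_THRESHOLD
```

With these toy values, `is_semantically_similar("cat", "dog")` is true, `is_semantically_similar("log", "dog")` is false, and `is_neologism("tat")` is true, mirroring the examples in the post.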

Evaluating machine translation quality with BLEU

I wrote this quite a while ago, but here’s my handout on BLEU, a metric used to evaluate machine translation systems. Everything here is still just as applicable in the era of neural machine translation.